By Ruby Deetz, 7th grade

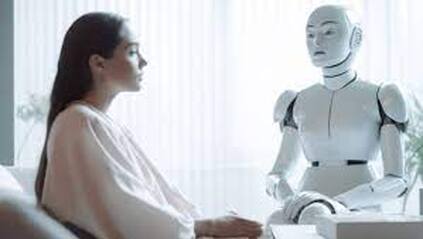

AI, as we all know, is a powerful tool. It has already replaced jobs in data entry, basic customer service, and bookkeeping. But what if I told you that AI could take on the role of a therapist? Well, it already has. The real question is: how effectively can it replace a human, and how comfortable are people opening up to a bot?

Many people, especially teens, have turned to AI for mental health support instead of a licensed professional. Hannah Adams, from the middle school Theater Department, said she uses AI for therapy. “I use it because a bot will be honest with you while real life people tend to sugar-coat things.” Adams feels that when she talks to her friends about her struggles, they comfort her but don’t really help with the issue at hand, while AI gives her actual solutions that help.

A common claim is that AI will replace therapists, but Alison Darcy, founder of Woebot Health, the company behind the chatbot of the same name, said that isn’t the company’s intention. According to The Washington Post, Darcy said there should be a bigger discussion about what technology can do differently to “engage people in ways and at times that clinicians can’t.”

An AARP article included other voices who see a place for AI therapy bots. “I don’t think [AIs] are ever going to replace therapy or the role that a therapist can play,” says C. Vaile Wright, Senior Director of the Office of Health Care Innovation at the American Psychological Association in Washington, D.C. “But I think they can fill a need … reminding somebody to engage in coping skills if they’re feeling lonely or sad or stressed.”

However, later in the article, Wright said, “The problem with this space is it’s completely unregulated, there’s no oversight body ensuring that these AI products are safe or effective.”

There are many AI-powered apps people can turn to for therapy, such as Wysa, Misü, Youper, and Sanvello. One of the most popular is Happify. “Happify is a popular mental health app that uses AI to provide fun activities and games designed to reduce stress and make you feel better. It uses your mental health information to give you personalized support. You can use it anytime to improve your mood and overall well-being,” says Manuel Alejandro Patiño, author of the article “8 Best AI Apps for Mental Health.” According to an article by One Mind PsyberGuide, Happify gathers mental health data through a questionnaire: “Users create an account and answer a short questionnaire which helps the app suggest a ‘track’ for the user. ‘Tracks’ are groups of activities and games which help the user achieve their goals.”

“I think it’s a good thing that AI is being used in the [mental health] industry,” says 7th grader Izadora Abell from the Theater Department. “I think it’s good that we’re finding ways to make therapy more accessible and at a lower cost.” Despite her support, Abell says she would not use it herself because she needs a “human-to-human connection.”

Beyond making therapy more accessible, AI could also help ease the shortage of therapists. According to an annual survey by the federal Substance Abuse and Mental Health Services Administration (SAMHSA), roughly one in five adults in the United States sought care for a mental health condition in 2021, yet more than a quarter of them felt they did not receive the help they needed. Among people 50 and older, about 15% sought those services, and roughly one in seven of them felt they did not get what they needed.

But, of course, some people are skeptical that robots can read or respond appropriately to the full range of human emotion, and they worry about what happens when this approach fails. Controversy flared up on social media recently over a canceled experiment involving chatbot-assisted therapeutic messages. A company called Koko used AI to help craft mental health support messages for about 4,000 of its users in October. But when Koko’s CEO, Rob Morris, took to Twitter to discuss the experiment, which users had actually been happy with, things went haywire.

“Morris posted a thread to Twitter about the test that implied users didn’t understand an AI was involved in their care. He tweeted that ‘once people learned the messages were co-created by a machine, it didn’t work.’ The tweet caused an uproar on Twitter about the ethics of Koko’s research… Morris said these words caused a misunderstanding: the ‘people’ in this context were himself and his team, not unwitting users. Koko users knew the messages were co-written by a bot, and they weren’t chatting directly with the AI, he said,” Gizmodo reported.

“The hype and promise is way ahead of the research that shows its effectiveness,” said Serife Tekin, a philosophy professor and mental health ethics expert at the University of Texas at San Antonio. “Algorithms are still not at a point where they can mimic the complexities of human emotion, let alone emulate empathetic care,” she said in an AARP article.

Tekin worries that teenagers, for example, might try AI-driven therapy, find it insufficient, and then refuse to see a human therapist. “My worry is they will turn away from other mental health interventions saying, ‘Oh well, I already tried this and it didn’t work,’” she said.

The effect of AI therapy seems to vary from person to person, and the topic remains controversial. Some people love it; others hate the very idea of talking to a bot about their struggles. How AI will become part of our lives, and how far it will go in taking on the roles of professionals in any industry, is still to be determined.
