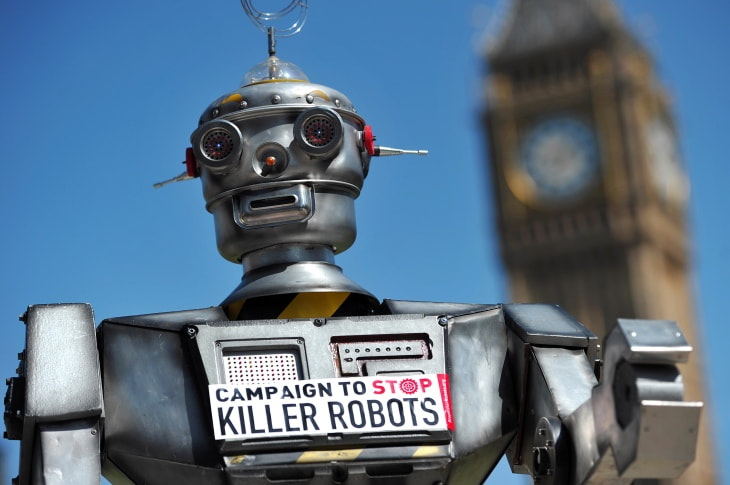

"Last month, the city of San Francisco voted to allow their police to use killer robots, okaying a policy so dystopian one might think it was pulled from the pages of Orwell's 1984. In this proposal, the SFPD would have weaponized their current arsenal of 12 remote-controlled surveillance robots, which would give them the ability to use lethal force in extreme circumstances," -- Ava Rukavina, 10th Grade

Thanks to citizens voicing their concerns online and protesting outside of San Francisco City Hall, the decision was reconsidered, and unanimously voted against at a second meeting. Still, the implications that this proposal has for the future of policing in America are far more deadly than any android.

This policy, and the board meetings in which it was discussed, have revived a political debate that started in 2016, when Dallas police used a ground robot to kill an active shooter: whether it is ethical to weaponize robots. Many make the case that in extreme situations officers could risk great bodily harm or even death if they intervene; in the Dallas shooting, five officers died before the shooter was killed. Perhaps if the armed robot had intervened earlier, casualties could have been prevented.

Others caution against arming robots – several leading companies in the robotics industry recently published a letter advocating against the weaponization of robots, arguing that “the emergence of advanced mobile robots offers the possibility of misuse.” If police departments in America are granted the right to use weaponized robots, that power will almost certainly be abused, as is so often the case within the police system. The existing weaponry that police carry already poses a threat to innocent citizens, especially in black communities. It’s no secret that American law enforcement has a history of abusing power – according to a government study, lethal force by law enforcement “account[s] for approximately 1% of all violent deaths in the U.S. each year and 4% of all homicides,” and victims were “disproportionately black… with a fatality rate 2.8 times higher.”

Thus far, every incident of a robot using lethal force, like that in Dallas, has involved a remote-controlled machine. For now, the power to use lethal force against a person is still technically in the hands of the officers who control the robots, but it may not be long before the robots could be making those decisions themselves.

Artificial intelligence is advancing rapidly and is already being integrated into many different institutions. The algorithms of search engines and social media rely on machine learning to function, and some occupations like hotel room service and food delivery have already been taken over by machines. Now, AI is beginning to be integrated into law enforcement in some places around the world. In 2016, at a technology fair in China, the AnBot made its debut: a 1.5-meter-tall patrol robot capable of responding to emergencies, chasing criminals, and, in its most controversial feature, tasing potential suspects. The following year, Dubai’s first robot officer, a humanoid policeman that reports crimes, collects traffic data, and issues fines, joined the force. Officials say they hope that by 2030, 25% of Dubai’s police will be robotic.

Thankfully, neither of these robocops is equipped with deadly weapons, but given that the technology for weaponized robots and AI officers already exists, how long is it before the two are combined? Perhaps artificial intelligence does have the capacity to perform less nuanced tasks such as patrolling and handling crimes with lower stakes, but we certainly shouldn’t allow a machine to hold a human being’s life in its hands.

These potentially automated officers are incredibly dangerous, because when a machine has been trained on existing data, the flaws in our systems are amplified. With the potential introduction of AI in law enforcement, America’s problem of police violence against black communities would only worsen. In a study by Johns Hopkins University, researchers repeatedly showed images of people to a popular artificial intelligence algorithm and asked it to select which one was the criminal. The algorithm repeatedly chose black men.

This is not the only case of AI showing racial bias either – it pops up in study after study about the ethics of artificial intelligence. In 2016, Microsoft launched a chatbot named Tay, which used machine learning algorithms to interact with users on Twitter. The account had to be taken down after it began spouting racist comments in less than a day. Many factors can lead to this racial bias in artificial intelligence, but chief among them is that “these systems are often trained on images of predominantly light-skinned men,” as author Joy Buolamwini explains in her Time article. The result is a program that labels people of color as foreign and threatening.

Ultimately, the proposal to allow police robots to use lethal force against citizens opens up a whole window of dystopian possibilities. This technology has the capacity to increase the number of casualties that police cause while on the job, especially in black communities, and in the future it could be accompanied by racist artificial intelligence algorithms. The most important thing to keep in mind, though, is that we are not powerless against the government. It was the protests of the people that kept deadly robots off San Francisco’s city streets, and it will be the protests of the people that contribute to a more humane and just society.
